How to Stream File Content Directly to the Browser

- Shahaf Sages

- Streaming

- 17 Dec, 2023

As part of a solution I implemented based on Generative AI and RAG (Retrieval-Augmented Generation), I needed to display large files efficiently, directly in the browser. Traditional methods of loading and serving files can be slow and resource-intensive, leading to performance issues.

In this blog post, we'll explore how to build a file streaming application with FastAPI and Next.js that addresses these challenges.

Let's get started!

The Challenge

The web application lets users view the source files cited in each AI chat response. Traditionally, you might read the entire file into memory on the server before serving it to the client. This approach can be problematic for several reasons:

- High memory consumption: Large files can quickly consume available memory, leading to crashes and performance issues.

- Slow loading times: Users have to wait for the entire file to be downloaded before they can start viewing it.

- Inefficient resource utilization: Server resources are unnecessarily allocated even if only a small portion of the file is being viewed.

Show it as soon as possible

Fortunately, there's a better way! By using FastAPI for the backend and Next.js for the frontend, we can implement file streaming, which offers several key benefits:

- Improved performance: By reading and sending the file in chunks, we significantly reduce memory consumption and improve loading times.

- Enhanced responsiveness: The browser starts receiving data as soon as the first chunk is sent, rather than after the server has read the whole file, leading to a more responsive user experience.

- Efficient resource utilization: The server holds only one chunk of the file in memory at a time, regardless of the file's size.
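The memory difference between reading a whole file and reading it in chunks is easy to measure with Python's built-in tracemalloc module. The sketch below is illustrative only: the function names and the 5 MB temporary file are assumptions, not part of the tutorial's code. It compares the peak memory Python allocates for each approach:

```python
import os
import tempfile
import tracemalloc


def read_whole_file(path: str) -> int:
    """Traditional approach: load the entire file into memory at once."""
    with open(path, "rb") as f:
        data = f.read()
    return len(data)


def read_in_chunks(path: str, chunk_size: int = 1024) -> int:
    """Streaming approach: hold at most one chunk in memory at a time."""
    total = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            total += len(chunk)
    return total


def peak_memory(func, path: str) -> tuple:
    """Run func(path) and return (result, peak bytes traced by tracemalloc)."""
    tracemalloc.start()
    try:
        result = func(path)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


if __name__ == "__main__":
    # Hypothetical 5 MB file of random bytes, created only for this measurement.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(5 * 1024 * 1024))
    try:
        for func in (read_whole_file, read_in_chunks):
            size, peak = peak_memory(func, tmp.name)
            print(f"{func.__name__}: read {size} bytes, peak {peak / 1024:.1f} KiB")
    finally:
        os.remove(tmp.name)
```

The whole-file version peaks at roughly the file size, while the chunked version stays near the chunk and buffer size no matter how large the file is.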

Implementation Highlights

Here's a breakdown of the key implementation aspects:

FastAPI Backend:

- A FastAPI application is created with an endpoint that accepts a filename as a path parameter.

- The endpoint checks if the file exists and returns an error if not.

- A generator function reads the file chunk by chunk and yields each chunk.

- A StreamingResponse object is returned with the content, media type, and appropriate headers.

Next.js Frontend:

- The React framework is used to build the user interface.

- A Next.js route handler uses the fetch API to request the file from the backend endpoint.

- The route handler passes the backend's response body (a stream) straight through to the browser instead of buffering the whole file.

- The page displays the streamed file in an iframe.

Getting Started

Start by creating a folder called simple_file_streaming_application, which will serve as the root folder for our application.

Backend Creation with FastAPI

Prerequisites

To successfully complete this tutorial in VS Code, you first need to set up your Python development environment.

- Python 3

- Python extension for VS Code (For additional details on installing extensions, you can read Extension Marketplace).

Create a folder called backend and navigate to it.

Create a requirements.txt file that lists the dependencies we wish to install for the backend service.

We will install FastAPI for creating the app and uvicorn to work as the server. Add the following content to the requirements.txt file:

fastapi
uvicorn

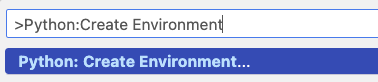

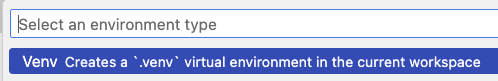

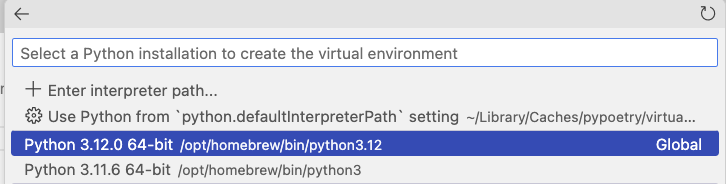

Create a virtual environment by opening the Command Palette (⇧⌘P) in VS Code and running the Python: Create Environment command.

When asked for the environment type, select Venv.

Then select the latest version of Python available on your machine.

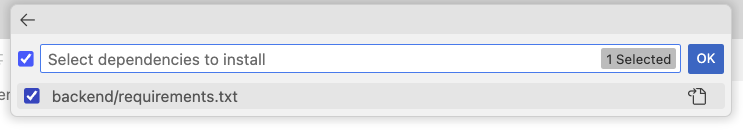

Select the requirements.txt file from the dropdown list so the dependencies are installed automatically, and then select OK.

The virtual environment will be created (this can take a few minutes), the dependencies automatically installed, and the environment selected for your workspace to be used by the Python extension. You can confirm it's been selected by seeing a message such as this:

Hello World Endpoint

Inside the backend folder, create a new Python file called main.py. Add the following starter code:

from fastapi import FastAPI

app = FastAPI()


@app.get("/")
def root():
    return {"message": "Hello World"}

Open the terminal and make sure the virtual environment is active, i.e. the prompt starts with (.venv).

Navigate to the backend folder, and run the command:

uvicorn main:app --reload

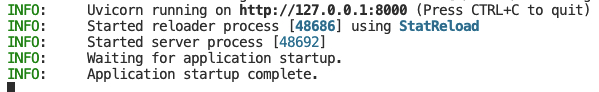

You should see something similar to this in the terminal:

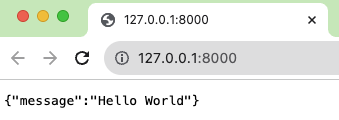

Command-click (Ctrl+click on Windows and Linux) the “http://127.0.0.1:8000” URL in the terminal to open that address in your default browser:

Congratulations! Your FastAPI app is up and running!

File Loading and Streaming

Create a folder called files inside the backend folder:

simple_file_streaming_application
|_ backend
   |_ files

Add the following function, which loads the file content, to the bottom of main.py:

def load_file_content(file_path: str):
    # Open in binary mode so any file type (PDF, images, ...) is read unchanged.
    with open(file_path, "rb") as file:
        while True:
            chunk = file.read(1024)  # read at most 1024 bytes
            if not chunk:  # an empty bytes object means end of file
                break
            yield chunk

This is a generator function: each time the caller asks for the next value, it reads up to 1024 bytes from the file and yields them, so only one chunk is held in memory at a time. The with block closes the file once the generator is exhausted.
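To see what the generator produces without running the server, you can call it directly on a small file. This sketch assumes a throwaway 2,500-byte temporary file and adds a hypothetical chunk_size parameter for illustration (the tutorial's version hard-codes 1024):

```python
import os
import tempfile


def load_file_content(file_path: str, chunk_size: int = 1024):
    """Yield the file's bytes in chunks of at most chunk_size bytes."""
    with open(file_path, "rb") as file:
        while True:
            chunk = file.read(chunk_size)
            if not chunk:  # b"" means end of file
                break
            yield chunk


# Hypothetical 2,500-byte file used only for this demonstration.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"a" * 2500)

chunks = load_file_content(tmp.name)  # nothing has been read yet
sizes = [len(chunk) for chunk in chunks]  # iterating drives the reads
print(sizes)  # [1024, 1024, 452]
os.remove(tmp.name)
```

Calling load_file_content does not read anything by itself; the reads happen only as the consumer (here the list comprehension, in the app StreamingResponse) iterates over it.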

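Next, add a new endpoint with the route “/file/{file_name}”, which calls the load_file_content function. The snippet below includes the imports it needs; merge them into the top of main.py and keep a single app = FastAPI() instance rather than creating a second one:

import os

from fastapi import FastAPI, HTTPException
from fastapi.responses import StreamingResponse

app = FastAPI()

# Directory holding the files to serve (backend/files, next to main.py).
FILES_DIR = os.path.join(os.path.dirname(os.path.abspath(__file__)), "files")


@app.get("/file/{file_name}")
def stream_file(file_name: str):
    file_path = os.path.join(FILES_DIR, file_name)

    # isfile (rather than exists) also rejects directories such as ".."
    if not os.path.isfile(file_path):
        raise HTTPException(status_code=404, detail="File not found.")

    # StreamingResponse iterates the generator and sends each chunk as it is produced.
    return StreamingResponse(
        content=load_file_content(file_path=file_path),
        media_type="application/pdf",
    )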

Notice the use of StreamingResponse: it receives the generator returned by load_file_content and sends each chunk to the client as the generator produces it. In this example, media_type is set to "application/pdf" because we are serving a PDF file; in a more general use case, the media type should be detected dynamically from the actual file being loaded.
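One way to detect the media type, sketched here as an assumption rather than the tutorial's code, is Python's standard mimetypes module, which guesses from the file extension. The helper name guess_media_type and the application/octet-stream fallback are illustrative choices:

```python
import mimetypes


def guess_media_type(file_name: str, default: str = "application/octet-stream") -> str:
    """Guess a Content-Type from the file extension, with a generic fallback."""
    media_type, _encoding = mimetypes.guess_type(file_name)
    return media_type or default


print(guess_media_type("the_zen_of_python.pdf"))  # application/pdf
print(guess_media_type("notes.unknownext"))  # application/octet-stream
```

In stream_file you could then pass media_type=guess_media_type(file_name). Note that this inspects only the extension, not the file's bytes, so a mislabeled file will still get the wrong type.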

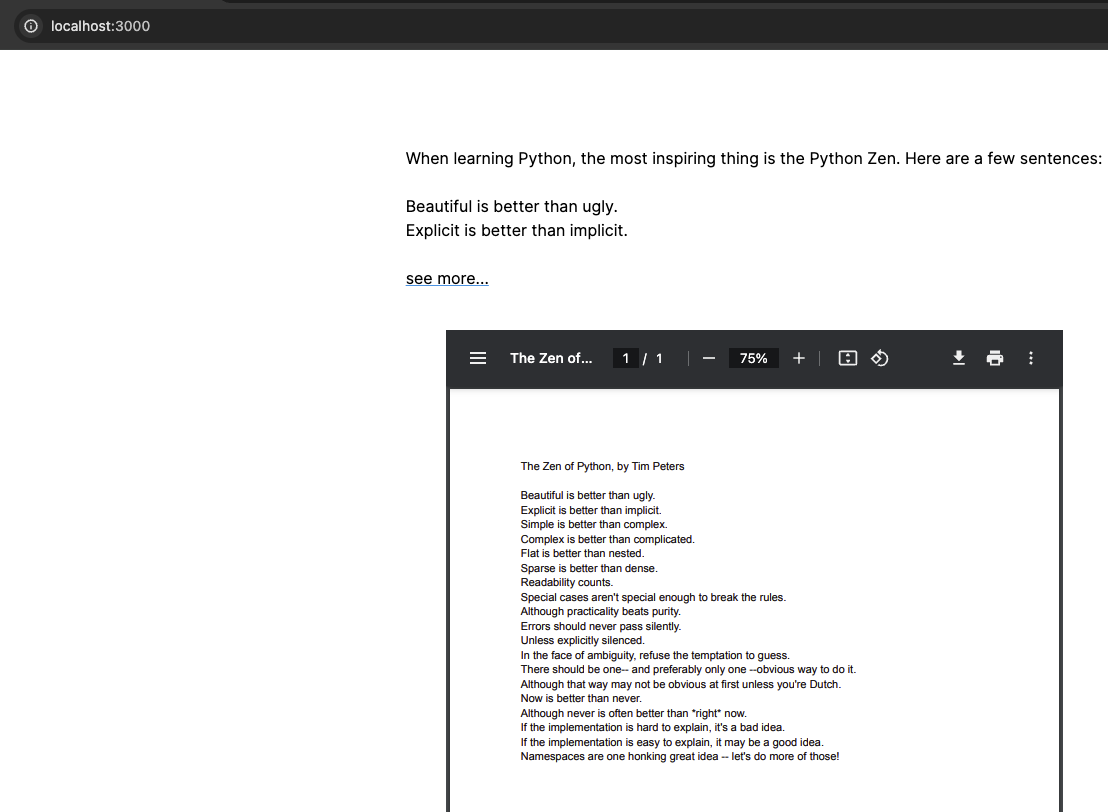

Inside the backend/files folder, place your favorite PDF file. As an example, we use a file called “the_zen_of_python.pdf”. Guess what it's about :)

Open the following URL in a browser tab: http://127.0.0.1:8000/file/the_zen_of_python.pdf

Cool! Here is the PDF file content streamed to your browser.

To confirm that the data is streamed in chunks, open Chrome's developer tools, select the request in the Network tab, and check that the response headers include the following:

Server: uvicorn
Transfer-Encoding: chunked
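You can also check the headers from a script instead of DevTools. The sketch below uses only Python's standard library; read_stream and inspect_stream are hypothetical helper names. Note that urllib decodes chunked transfer encoding transparently, so the number of reads does not match the server's chunks; the Transfer-Encoding header is what confirms streaming:

```python
import urllib.request


def read_stream(response, chunk_size: int = 1024) -> list:
    """Read a file-like response until EOF and return the size of each read."""
    sizes = []
    while chunk := response.read(chunk_size):
        sizes.append(len(chunk))
    return sizes


def inspect_stream(url: str, chunk_size: int = 1024) -> tuple:
    """Fetch url, print key headers, and return (Transfer-Encoding, total bytes)."""
    with urllib.request.urlopen(url) as response:
        transfer_encoding = response.headers.get("Transfer-Encoding")
        print("Server:", response.headers.get("Server"))
        print("Transfer-Encoding:", transfer_encoding)
        sizes = read_stream(response, chunk_size)
    total = sum(sizes)
    print(f"Received {total} bytes in {len(sizes)} reads")
    return transfer_encoding, total
```

With the backend running, call inspect_stream("http://127.0.0.1:8000/file/the_zen_of_python.pdf"); it should report chunked as the transfer encoding.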

Frontend Creation with Next.js

Prerequisites

- Node.js LTS installed (this example uses Node version v18.18.2)

- npm installed (this example uses npm version 9.8.1)

Creating the Service

Open a new terminal (Ctrl+Shift+`).

Navigate to the root folder of our project.

Note: if the terminal opens with the Python venv active, type “deactivate” to exit it.

To create a new Next.js app, run the following command in the terminal:

npx create-next-app@latest frontend --typescript --tailwind --eslint

This command creates a new Next.js project called frontend, configured for TypeScript, with Tailwind CSS for styling and ESLint for linting.

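Answer the installation questions as shown below. Choose Yes for the src/ directory and the App Router, since the rest of this tutorial relies on the frontend/src/app folder: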

➜ file_streaming_application npx create-next-app@latest frontend --typescript --tailwind --eslint
Need to install the following packages:
create-next-app@14.0.4
Ok to proceed? (y) y
✔ Would you like to use `src/` directory? … No / Yes
✔ Would you like to use App Router? (recommended) … No / Yes
✔ Would you like to customize the default import alias (@/*)? … No / Yes
Creating a new Next.js app in /file_streaming_application/frontend.

After installation completes, you should see a message similar to this:

Success! Created frontend at /file_streaming_application/frontend

From the terminal, navigate to the frontend folder and run the command:

npm run dev

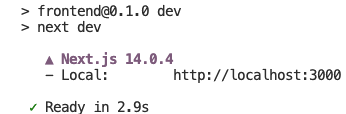

You should see output similar to this in the terminal:

Click the URL http://localhost:3000 in the terminal to open it in your default browser.

Once the page loads, you should see something like this:

Success! You have a Next.js server running locally.

Consuming the Backend File Streaming Endpoint

Navigate to the frontend/src/app folder, create a folder called api inside it, and inside api create another folder called citations:

simple_file_streaming_application
|_ backend
|  |_ files
|_ frontend
   |_ src
      |_ app
         |_ api
            |_ citations
               |_ route.ts

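Inside the citations folder, create a file called route.ts with the following code:

import { NextRequest } from 'next/server';

export async function GET(req: NextRequest) {
  const fileName = req.nextUrl.searchParams.get('file_name');
  if (!fileName) {
    return new Response('Missing file_name query parameter.', { status: 400 });
  }

  // Request the file from the FastAPI backend; encode the name so it is a valid path segment.
  const response = await fetch(
    `http://127.0.0.1:8000/file/${encodeURIComponent(fileName)}`,
    { cache: 'no-store' } // don't let Next.js cache the file body
  );

  if (!response.ok) {
    return new Response('File not found.', { status: 404 });
  }

  // Forward the backend's content type, falling back to PDF.
  const mimeType = response.headers.get('content-type') ?? 'application/pdf';

  // Pass the backend's ReadableStream straight through without buffering it.
  return new Response(response.body, {
    status: 200,
    headers: new Headers({ 'content-type': mimeType }),
  });
}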

This creates a GET route handler, served at http://localhost:3000/api/citations?file_name=[file name], that reads the file name from the query string, fetches the file from the backend, and forwards the response body to the browser as a stream, without buffering the whole file.

Place your favorite PDF file in the backend/files folder, or download the example file the_zen_of_python.pdf.

In the browser, enter the following URL: http://localhost:3000/api/citations?file_name=the_zen_of_python.pdf

Success! You should see the PDF file appear.

Showing the File from the Citation

Replace the entire content of frontend/src/app/page.tsx with the following code:

'use client';

import { useState } from 'react';

// Relative URL of the Next.js route handler that proxies the backend stream.
const citationUrlPrefix = '/api/citations?file_name=';

export default function Home() {
  const [showCitation, setShowCitation] = useState(false);
  const [citationLink, setCitationLink] = useState<string>();

  // Toggle the viewer and point it at the requested file.
  const handleClickCitation = (fileName: string) => {
    setShowCitation((prev) => !prev);
    setCitationLink(citationUrlPrefix + encodeURIComponent(fileName));
  };

  return (
    <main className='flex min-h-screen flex-col items-center justify-between p-24 w-screen'>
      <div className='h-max'>
        <ul>
          <li>
            <p className='break-normal'>
              When learning Python, the most inspiring thing is the
              Python Zen. Here are a few sentences:
            </p>
            <br />
            <p>Beautiful is better than ugly.</p>
            <p>Explicit is better than implicit.</p>
            <br />
            <p
              className='underline decoration-sky-500 cursor-pointer'
              onClick={() => {
                handleClickCitation('the_zen_of_python.pdf');
              }}
            >
              see more...
            </p>
          </li>
        </ul>
        <div>
          {/* The iframe loads the streamed file through the route handler. */}
          {showCitation && (
            <iframe src={citationLink} title='Citation source' className='h-[1000px] w-full p-10' />
          )}
        </div>
      </div>
    </main>
  );
}

Replace the whole content of frontend/src/app/globals.css with the following code:

@tailwind base;
@tailwind components;
@tailwind utilities;

:root {
  --foreground-rgb: 0, 0, 0;
  --background-start-rgb: 214, 219, 220;
  --background-end-rgb: 255, 255, 255;
}

@media (prefers-color-scheme: dark) {
  :root {
    --foreground-rgb: 255, 255, 255;
    --background-start-rgb: 0, 0, 0;
    --background-end-rgb: 0, 0, 0;
  }
}

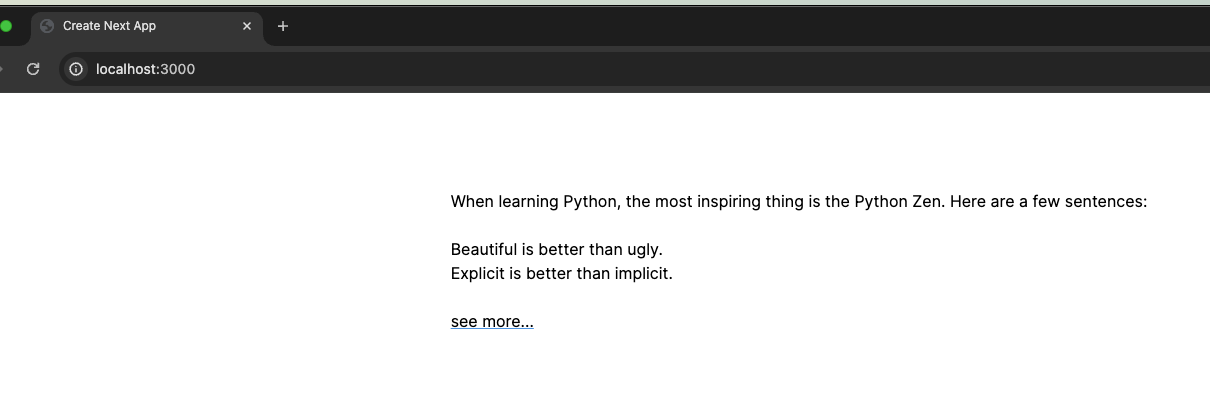

Browsing to http://localhost:3000/ should show something like this:

Click see more.... The first load may take a moment (adding a loading spinner is worthwhile), but the browser starts receiving the PDF as soon as the first chunk arrives:

Congratulations, you made it! Great work!

Achievements

By implementing this solution, we've achieved tangible benefits:

- Reduced memory consumption: The streaming approach significantly reduces memory usage compared to traditional methods.

- Improved loading times: Users experience faster loading times as they don't have to wait for the entire file to download.

- Enhanced user experience: The user interface becomes more responsive and interactive.

- Efficient resource utilization: Server resources are used more efficiently, which can lower hosting costs.

Conclusion

Building a file streaming application with FastAPI and Next.js is an effective approach for handling large files. This solution improves performance and user experience while using server resources more efficiently. If you're looking to optimize how your application handles large files, consider this combination of technologies.

Thank you!

Resources

Here is the GitHub repo with the full code.

Main photo by Vlada Karpovich